The Next Leap in Self-Driving: Prediction

Why prediction has overtaken perception as the biggest challenge in the field

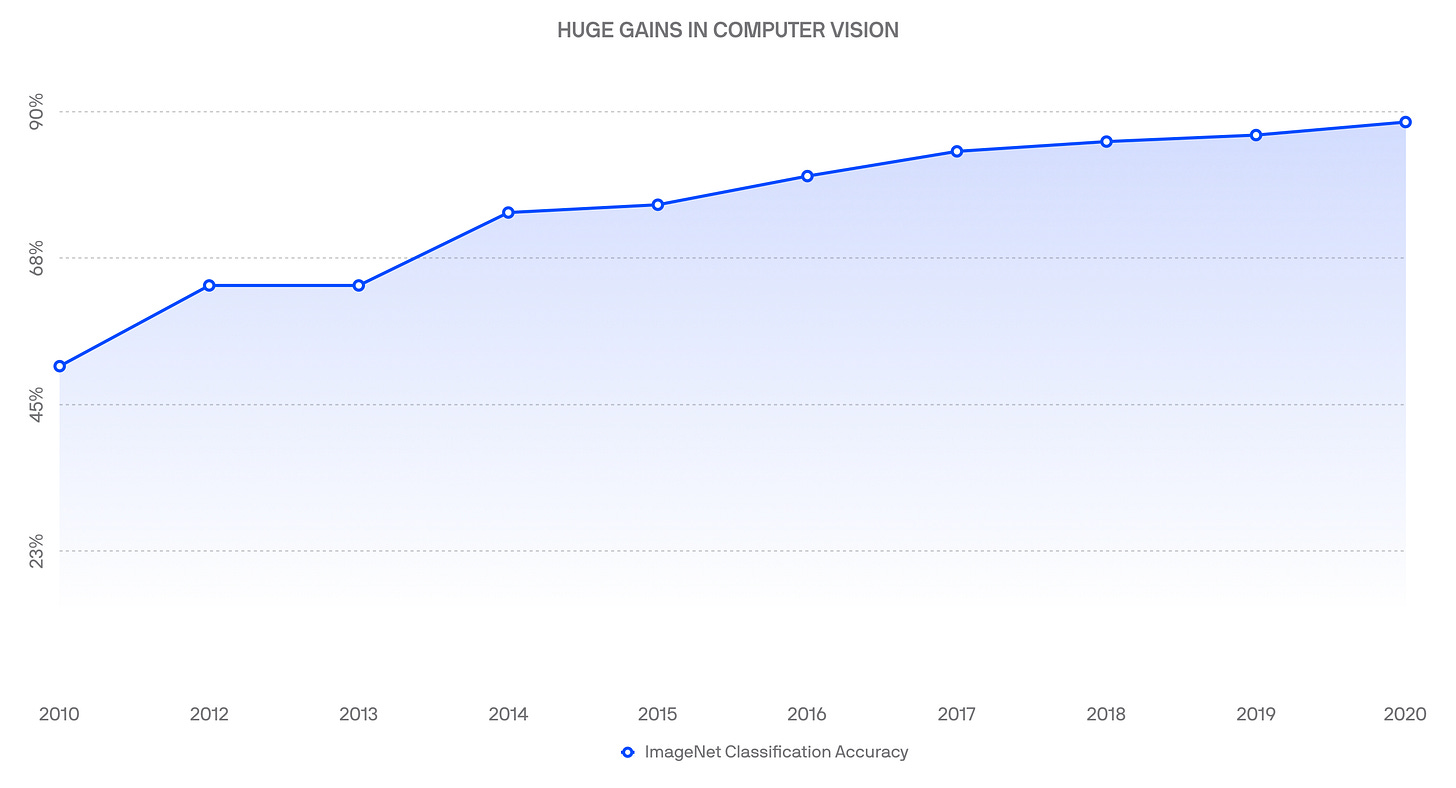

For the last decade, most of the conversation within the self-driving machine learning community has focused on object detection. How can we improve the ability of self-driving cars to detect and track all of the dynamic objects critical to safe navigation? In 2010, before Deep Learning became commonplace, perception was the primary limitation on the capabilities of self-driving cars: detectors produced far too many false positives and false negatives to be acceptable on a 3-ton machine. ImageNet classification accuracy illustrates this best. The state-of-the-art solution achieved just 50% accuracy in 2010 (compared to 88% today). Although ImageNet classification is not an apples-to-apples comparison with state-of-the-art object detection, it does serve as a proxy for progress in computer vision.

Two years later, in 2012, AlexNet was one of the first entrants in the ImageNet competition to use Deep Learning with Convolutional Neural Networks. After achieving state-of-the-art accuracy on ImageNet that year, the AlexNet paper became perhaps the most influential paper in computer vision.

Deep Learning, whether applied to lidar, cameras, or radar, began to creep into self-driving technology around 2014. A famous example of Google's self-driving car yielding to a lady in a wheelchair chasing a duck with a broom showed just how far perception technology had come since 2010.

Today, Deep Learning for perception is commonplace in self-driving cars, and we continue to see large performance gains from it. In recent years, networks such as VoxelNet, PIXOR, and PointPillars have pushed forward the state of the art in 3D object detection from lidar point clouds. Although we should by no means assume that robots have achieved perfect perception, the state of the art in computer vision has advanced so significantly that perception is arguably no longer the primary blocker to the commercial deployment of self-driving cars.

Note: I am heavily biased, but the above statement is predicated on a self-driving car having multiple sensor modalities, including a time-of-flight sensor (such as lidar) that returns physics-accurate depth information to feed your perception models. Sorry, Tesla!

Now that perception is no longer the burning fire in self-driving, what's next? Prediction!

The Art of Prediction

Once we can reliably detect the critical objects around us, we must predict what they are going to do next. Predicting correctly means executing the right maneuver at the right time, taking into account the actions of those around us. Predicting incorrectly means we may drive ourselves into a position of danger. As humans, we perform this prediction intuitively, using thousands of environmental inputs.

To make this concrete, let's return to the example from my first post on Reinforcement Learning and Imitation Learning for self-driving cars: how a robotic self-driving car may handle an unprotected left turn.

Prediction is central to the most difficult instances of the unprotected left turn. Before executing the turn, a self-driving car must predict the future actions of all the dynamic agents around it, a task that requires significantly more intelligence than other problems in autonomous driving. Human drivers, albeit imperfect, lean heavily on general intelligence, real-world driving experience, and social cues (such as nudging or hand signals) to successfully execute unprotected left turns.

Although machines have clear advantages over humans (e.g. 360° long-range vision), traditional prediction within self-driving technology can be quite primitive by comparison. A typical pipeline looks like this:

1. Your perception module outputs a set of object detections (e.g. vehicles, pedestrians) within a certain radius of the self-driving car, which are then fed into your prediction module.
2. Your prediction module uses present (e.g. orientation, speed) and previous observations to generate individual predictions about what each object may do in the next 5 seconds.
3. By feeding all of these individual predictions into an algorithm, a hypothesis can be generated about the safest action the self-driving car can execute.
4. The self-driving car begins the prescribed maneuver and re-assesses the decision every 100 milliseconds.
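The steps above can be sketched in a few lines of Python. This is a minimal sketch under loudly stated assumptions, not any company's actual stack: each object is extrapolated with a constant-velocity model (one common "traditional" choice), the algorithm in step 3 is reduced to a hypothetical check of whether any predicted path enters a rectangular conflict zone while the ego car is turning (assumed to occupy the zone from now until `turn_duration_s`), and the names `Detection`, `predict_constant_velocity`, `in_zone`, and `choose_action` are illustrative inventions.

```python
import math
from dataclasses import dataclass

HORIZON_S = 5.0  # predict 5 seconds ahead, as in step 2
TICK_S = 0.1     # re-assess every 100 milliseconds, as in step 4


@dataclass
class Detection:
    """A tracked object from a hypothetical perception module (ego frame, metres)."""
    obj_id: str
    x: float        # metres ahead of the ego car
    y: float        # metres to the left of the ego car
    heading: float  # radians, 0 = same direction as the ego car
    speed: float    # metres per second


def predict_constant_velocity(det, horizon_s=HORIZON_S, dt=TICK_S):
    """Assumed traditional model: keep current speed and heading for the whole horizon.
    Returns a list of (t, x, y) points, one per tick."""
    steps = round(horizon_s / dt)
    vx = det.speed * math.cos(det.heading)
    vy = det.speed * math.sin(det.heading)
    return [(k * dt, det.x + vx * k * dt, det.y + vy * k * dt)
            for k in range(1, steps + 1)]


def in_zone(x, y, zone):
    """zone = (x_min, x_max, y_min, y_max): a rectangle the left turn sweeps through."""
    x_min, x_max, y_min, y_max = zone
    return x_min <= x <= x_max and y_min <= y <= y_max


def choose_action(detections, conflict_zone, turn_duration_s):
    """Hypothetical step 3: wait if any object is predicted inside the conflict
    zone during the first turn_duration_s seconds (while the ego car is in it)."""
    for det in detections:
        for t, x, y in predict_constant_velocity(det):
            if t <= turn_duration_s and in_zone(x, y, conflict_zone):
                return "wait"
    return "turn"


if __name__ == "__main__":
    zone = (10.0, 16.0, 1.5, 5.5)  # hypothetical patch of the oncoming lane
    near = Detection("car_1", x=60.0, y=3.5, heading=math.pi, speed=15.0)
    far = Detection("car_2", x=100.0, y=3.5, heading=math.pi, speed=15.0)
    print(choose_action([near], zone, turn_duration_s=4.0))  # wait
    print(choose_action([far], zone, turn_duration_s=4.0))   # turn
```

In a real loop, `choose_action` would be called again every `TICK_S` seconds with fresh detections. The sketch also shows why this approach feels robotic: a constant-velocity model cannot anticipate an oncoming driver slowing down to let you go.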

You can imagine that this robotic approach results in uncomfortable and potentially dangerous driving behavior, especially in dense urban environments. Over the last few years, we've seen an explosion of experimentation with Deep Learning approaches to prediction. These approaches have the potential to significantly improve the accuracy of predictions, transforming them from robotic to human-like.

Replacing these primitive predictions with data-driven approaches is eerily similar to how Deep Learning supplanted classical perception in the mid-2010s.

Here are some examples of this in action.

Cruise’s Engineering Manager for Perception gave a great talk on how they are approaching learned prediction as a classification problem. I was particularly intrigued by the tooling they’ve built to enable rapid experimentation, in addition to their fleet learning capabilities with auto-labeling of scenarios.
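Posing prediction as classification means choosing among a small set of discrete outcomes for each agent and outputting a probability for each. The sketch below only illustrates that framing and is not Cruise's method: the maneuver classes, the four features, and the hand-set weights are all hypothetical, and a real system would learn the weights from labeled fleet data rather than hard-coding them.

```python
import math

# Hypothetical maneuver classes for a vehicle approaching an intersection.
MANEUVERS = ["go_straight", "turn_left", "turn_right", "yield"]

# Hypothetical hand-set weights, one row per class. Feature order:
# [speed_mps, left_blinker_on, right_blinker_on, dist_to_stop_line_m]
WEIGHTS = [
    [0.30, -2.0, -2.0, 0.00],   # go_straight
    [-0.10, 3.0, -1.0, 0.00],   # turn_left
    [-0.10, -1.0, 3.0, 0.00],   # turn_right
    [-0.40, 0.0, 0.0, -0.20],   # yield: more likely when slow and close to the line
]
BIASES = [0.0, 0.0, 0.0, 1.0]


def softmax(logits):
    """Convert raw class scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify_maneuver(features):
    """Linear score per class followed by a softmax: a one-layer classifier."""
    logits = [sum(w * f for w, f in zip(row, features)) + b
              for row, b in zip(WEIGHTS, BIASES)]
    return dict(zip(MANEUVERS, softmax(logits)))


if __name__ == "__main__":
    # An oncoming car at 8 m/s, left blinker on, 20 m from the stop line.
    probs = classify_maneuver([8.0, 1.0, 0.0, 20.0])
    print(max(probs, key=probs.get))  # turn_left
```

Because the output is a probability per maneuver rather than a single guess, a planner can still account for a less likely but dangerous alternative.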

Uber shared their work on DRF-Net, which enhances pedestrian prediction: "Extensive experiments show that our model outperforms several strong baselines, expressing high likelihoods, low error, low entropy and high multimodality. The strong performance of DRF-NET's discrete predictions is very promising for cost-based and constrained robotic planning."
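Why discrete predictions suit cost-based planning can be shown without reproducing DRF-Net itself. Assume a model outputs, for one future timestep, a categorical distribution over grid cells for where a pedestrian will be. A planner can then score each candidate path by the probability mass it overlaps, and the entropy of the distribution summarizes how concentrated the prediction is. The grid, probabilities, and candidate paths below are hypothetical.

```python
import math

# Hypothetical predicted pedestrian location one timestep ahead, as a
# categorical distribution over cells (row, col) of a small 4x4 grid.
# It is bimodal: keep walking (top right) or stop near the curb (bottom left).
OCCUPANCY = {
    (0, 3): 0.35, (1, 3): 0.15,   # mode 1: keeps walking
    (3, 0): 0.30, (2, 0): 0.10,   # mode 2: stops near the curb
    (1, 1): 0.05, (2, 2): 0.05,   # small residual mass
}


def entropy(dist):
    """Shannon entropy in nats; lower means a more concentrated prediction."""
    return -sum(p * math.log(p) for p in dist.values() if p > 0)


def path_cost(path_cells, dist):
    """Probability that the pedestrian occupies a cell the path passes through."""
    return sum(dist.get(cell, 0.0) for cell in set(path_cells))


def pick_path(candidates, dist):
    """Cost-based choice: the candidate path with the lowest overlap probability."""
    return min(candidates, key=lambda name: path_cost(candidates[name], dist))


if __name__ == "__main__":
    candidates = {
        "hug_left": [(3, 0), (2, 0), (1, 0), (0, 0)],
        "center": [(3, 1), (2, 1), (1, 1), (0, 1)],
        "hug_right": [(3, 3), (2, 3), (1, 3), (0, 3)],
    }
    print(f"entropy: {entropy(OCCUPANCY):.3f} nats")
    print(pick_path(candidates, OCCUPANCY))  # center
```

A single-point prediction would have to pick one of the two modes; keeping the whole distribution lets the planner avoid both.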

Apple published a novel Reinforcement Learning paper titled Worst Cases Policy Gradients: “One of the key challenges for building intelligent systems is developing the capability to make robust and safe sequential decisions in complex environments.”

isee released their work on a learned approach to prediction, Multi-Agent Tensor Fusion, at CVPR 2019 (MAT stands for Multi-Agent Tensor): "This MAT encoding naturally handles scenes with varying numbers of agents, and predicts the trajectories of all agents in the scene with computational complexity that is linear in the number of agents, via a convolutional operation over the MAT."
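The key property in the quote is computational: agents are written into one shared spatial tensor, a convolution runs over that tensor, and each agent's updated features are read back from its own location. The sketch below illustrates that idea only and is not isee's model. Assumptions: a tiny 8x8 grid with 2 feature channels, a single fixed 3x3 kernel standing in for learned convolution layers, and hypothetical agent positions and features. Writing and reading agents costs O(N), while the convolution's cost depends on the grid size rather than on N, so total work grows linearly with the number of agents.

```python
# Hypothetical tensor dimensions: an 8x8 spatial grid with 2 feature channels.
H, W, C = 8, 8, 2


def zeros():
    return [[[0.0] * C for _ in range(W)] for _ in range(H)]


def scatter(agents):
    """Write each agent's feature vector into its grid cell: O(N) in agents."""
    grid = zeros()
    for _, (r, c), feat in agents:
        for k in range(C):
            grid[r][c][k] += feat[k]  # agents sharing a cell are summed
    return grid


def conv3x3(grid, kernel):
    """3x3 convolution (cross-correlation, as in deep learning) with zero padding,
    same kernel on every channel. Cost depends on H*W*C, not on the agent count."""
    out = zeros()
    for r in range(H):
        for c in range(W):
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    rr, cc = r + dr, c + dc
                    if 0 <= rr < H and 0 <= cc < W:
                        w = kernel[dr + 1][dc + 1]
                        for k in range(C):
                            out[r][c][k] += w * grid[rr][cc][k]
    return out


def gather(grid, agents):
    """Read each agent's fused features back from its own cell: O(N) in agents."""
    return {agent_id: list(grid[r][c]) for agent_id, (r, c), _ in agents}


if __name__ == "__main__":
    agents = [
        ("a", (2, 2), [1.0, 0.0]),
        ("b", (2, 3), [0.0, 1.0]),  # adjacent to "a", so they exchange features
        ("c", (6, 6), [5.0, 5.0]),  # far away, so it only sees itself
    ]
    box = [[1.0] * 3 for _ in range(3)]
    fused = gather(conv3x3(scatter(agents), box), agents)
    print(fused)  # {'a': [1.0, 1.0], 'b': [1.0, 1.0], 'c': [5.0, 5.0]}
```

A learned model would stack several trained convolution layers in place of the single fixed box kernel; the point here is only how nearby agents end up sharing information through the shared tensor.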

While prediction does not yet perform at the level it needs to, it's clear to me that data-driven approaches will bring huge leaps in prediction performance, much as Deep Learning transformed classical perception. These impending leaps will dramatically improve the decision making of self-driving cars, resulting in safer and smoother rides for passengers.

At Voyage, we'll soon be sharing our work on prediction. Until then, the only way to learn more about our approach is to join our amazing Predictions team.